The source of this article and its featured image is Wired AI. The description and key facts were generated by the Codevision AI system.

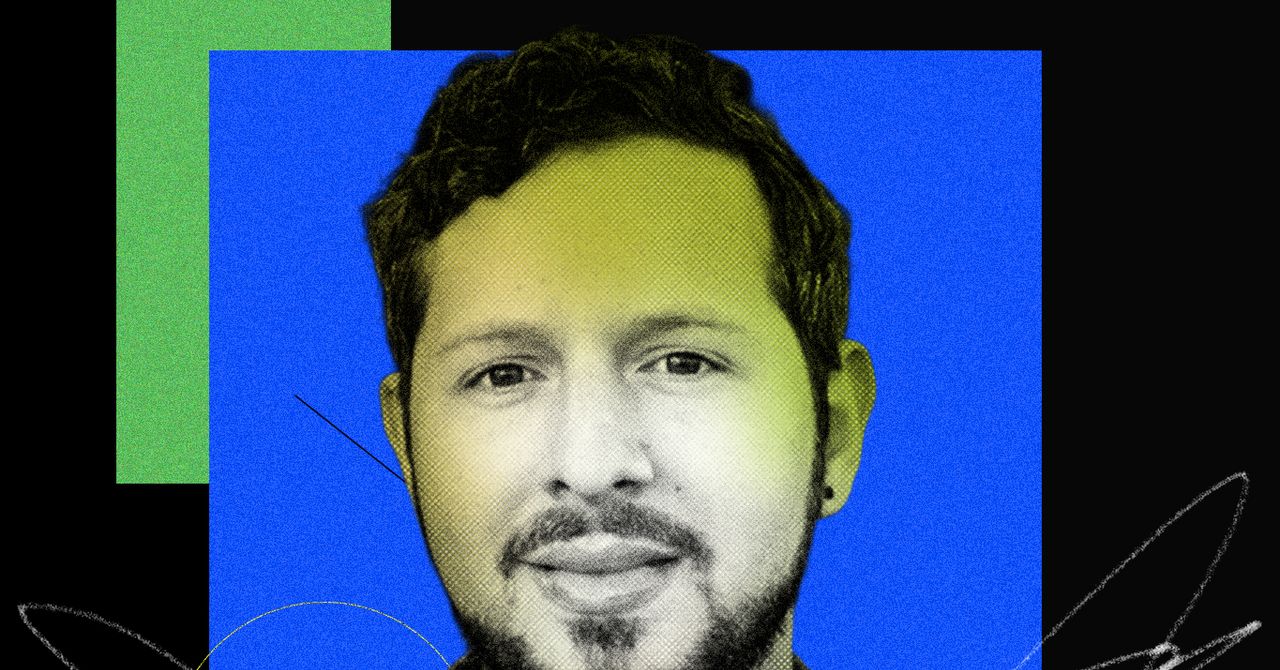

Steven Adler, a former OpenAI staffer, criticizes the company’s decision to allow erotic content in its chatbots, arguing that OpenAI has not put adequate safeguards in place to protect users’ mental health. He highlights how difficult it is to control AI behavior and the risks of unrestrained AI interactions. His concerns stem from his time leading safety initiatives at OpenAI, where he dealt with AI systems generating inappropriate content. His recent op-ed in The New York Times sparked a broader conversation about AI safety and the responsibility of tech companies. The interview explores the complexities of AI regulation and the ethical dilemmas developers face.

Key facts

- Steven Adler, a former OpenAI staffer, criticized the company’s decision to allow erotic content in its chatbots, citing a lack of proper safeguards.

- Adler led safety initiatives at OpenAI, where he encountered challenges with AI systems generating inappropriate content.

- His op-ed in The New York Times sparked a broader conversation about AI safety and the responsibility of tech companies.

- Adler expressed concerns about the mental health risks associated with unrestrained AI interactions.

- The interview explores the complexities of AI regulation and the ethical dilemmas developers face.