The source of this article and its featured image is TechCrunch. The description and key facts were generated by the Codevision AI system.

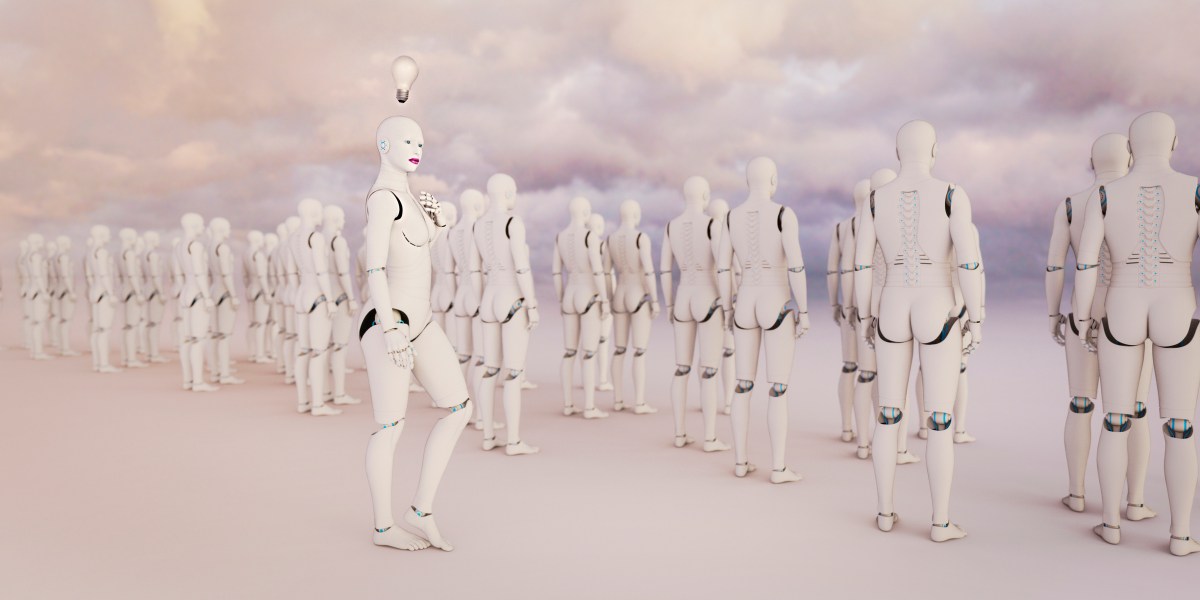

The article explores how large language models like ChatGPT unintentionally perpetuate gender stereotypes, even though they cannot meaningfully ‘admit’ to bias. It highlights how AI systems often associate specific careers with gender roles, using emotionally charged language for female names and technical terms for male names. Organizations report that girls are steered toward non-technical fields while STEM areas are overlooked, which deepens the gender gap in tech. Structural biases in training data mirror historical societal inequities, including homophobia and Islamophobia. Experts emphasize the need for diverse datasets and continuous model updates to address these systemic issues.

Key facts

- LLMs like ChatGPT frequently associate careers with traditional gender roles, suggesting ‘dancing’ or ‘baking’ for women and ‘aerospace’ for men.

- Training data reflecting historical societal biases leads to outputs that reinforce stereotypes, such as using emotionally laden language for female names.

- AI models may subtly perpetuate bias by using technical jargon for male names and emphasizing humility when describing female professionals.

- OpenAI has implemented safety measures like refined training data and human oversight to mitigate harmful outputs.

- Researchers stress the importance of diverse datasets and ongoing model iterations to reduce systemic biases in AI.